The applications of AI, and the possibilities ChatGPT has to offer, are growing rapidly. While these tools can be very useful, it is essential to keep in mind factors such as privacy, data protection and sustainability. That is why the Health Data Science core team has drawn up a set of rules.

Experimenting with ChatGPT and other generative AI products is easy: you simply create an account and off you go! You can then create any text, document or image you wish. That is very useful, but using these products also carries risks.

For example, Amsterdam UMC has not concluded any contracts with the providers of consumer AI products about what happens to Amsterdam UMC data. Amsterdam UMC remains responsible for the careful use of this data at all times. With consumer AI products, however, we have no idea what happens to the data, nor do we know where it is stored. As a result, Amsterdam UMC could end up in breach of laws and regulations.

And yet, generative AI has a lot of potential. As a knowledge institute, we need to investigate that potential. We distinguish three categories of use: healthcare, business-sensitive information, and experimenting with consumer applications such as ChatGPT.

Use in healthcare

EPIC and the EVA service centre are working together to introduce AI within EPIC to support healthcare processes. This is the only environment in which we are explicitly permitted to use AI with healthcare-related data.

To be clear: many of the AI initiatives already underway at Amsterdam UMC for specific healthcare applications do not use generative AI products. These applications often use AI or algorithms developed within a controlled Amsterdam UMC environment. The Health Data Science core team has drawn up frameworks for this.

Playground for business-sensitive information

For the development of AI applications outside the healthcare domain, the ICT service department will be setting up a ‘playground’. This controlled and safe environment is suitable for experimenting with business-sensitive information. Examples include further automating data analyses, linking various documents and databases, automating minute-taking in meetings, and writing and editing programming code. Once the playground is available, information on how to register will be shared via the various internal channels and on the Data-driven work theme page. This is a pilot programme, so the number of participants is limited.

Rules for using ChatGPT

Would you still like to use a consumer AI product such as ChatGPT in your work for Amsterdam UMC? Then keep in mind that these products are still under development. They have not been specifically designed for use in healthcare or research, and the output of a chat with a generative AI is not checked for accuracy. It is wise to observe the following rules when using these products in your work:

- Only share data that is already available in the public domain or from a public source. This means no personal data (anything that can be traced back to a person, including images), no business-sensitive information and definitely no patient information.

- Check results thoroughly for potential biases and factually incorrect statements. Consumer generative AI products such as ChatGPT are language models, not knowledge models: they generate plausible-sounding text rather than retrieving verified facts.

- Results may infringe the intellectual property rights of third parties. This is because the model was trained on large amounts of data taken from the internet, without regard for copyright. Many legal proceedings filed by authors, publishers and other parties are currently underway.

- For this reason, results must not simply be copied into documents that we publish or documents that bind us to legal obligations.

- Generative AI is not suitable for critical processes such as individual patient care or processes that include financial transactions.

Not sustainable

The application of generative AI is still in its infancy. It is important to experiment with possible applications while taking the associated risks into account. Another aspect to consider is the amount of energy needed to work with AI, due to the significant computing capacity it requires. Working with AI is therefore not sustainable. So make sure you have a clear goal in mind when you experiment, and use it conscientiously.

Useful tip number 1: do not share data

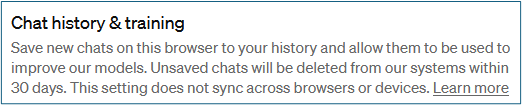

The paid version of ChatGPT has settings to prevent your data from being used to train the AI. It is advisable to turn this setting on. The setting in question is the following:

Useful tip number 2: a good prompt

When you start working with a generative AI, it is good to know that the quality of your question largely determines the quality of the answer. That is why it pays to write a good prompt. Below is a list of recommendations that can help you do so:

- Describe your target audience (for example management, students, colleagues, patients).

- Describe the language level of the target audience (A1/A2 beginner, B1/B2 intermediate, C1/C2 advanced).

- Describe the goal of the answer (for example to inform, entertain, convince).

- Describe the format of the answer (for example bullet points, dialogue, leaflet).

- Describe the tone or perspective of the answer (for example enthusiastic, critical, expert).

- Set constraints, such as the number of words for the answer.

- Ask for multiple points of view and positions.